In this edition of The Photo-Realism Challenge, we will be dealing with light. The topic will be covered chronologically, and for the sake of simplicity, I will follow DirectX releases (OpenGL had a really big spell in the middle where it just wasn’t very good).

Before I begin, however, I wanted to address a question from Johnmiceter in the previous article: “So how do we get real life graphics . . .? Could the PS4 achieve close to real live images with its power?” The answer to the second question is “no.” The most powerful PC build cannot, using present techniques, produce fully photo-realistic images in real time. The PS4 is less powerful than this. So no, the PS4 will not be photo-realistic. It will be better than the PS3 (arguably much better), but it won’t be photo-realistic.

As to the first question, the answer is “change the underlying technology.” Current 3D games use a method called “rasterizing” to generate scenes. In a raster engine, everything is made of triangles (called polygons), and these polygon models are converted into pixels for display on your screen. If you can, go and pick a flower. Look at it really closely. How many tiny triangles do you suppose it would take to completely imitate it? Hundreds of thousands? Millions? More? It simply isn’t possible, and since a flower is rarely the focus of a scene, a good artist will “dumb down” the detail of the flowers (and the trees and rocks and what have you) to give more polygons to the main actors. There are promising new techniques, such as voxel engines, that could maybe approach true photo-realism, but these are so CPU-intensive as to be impractical for the foreseeable future. Sorry. Unless there is a radical shift in computing technology, we will forever approach reality, but we will never achieve it.

This is what voxel rendering looks like. No, it is probably not running in real time.

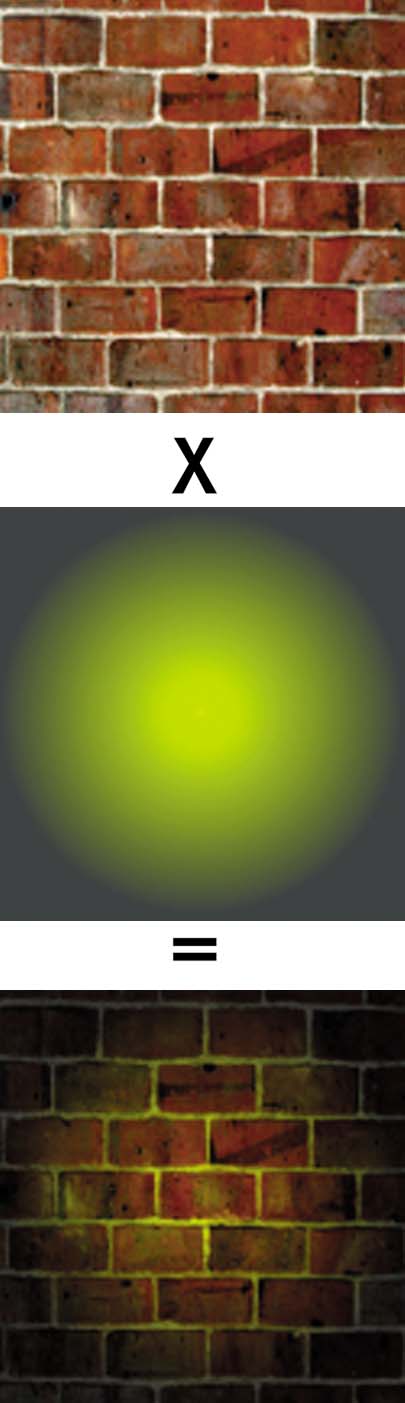

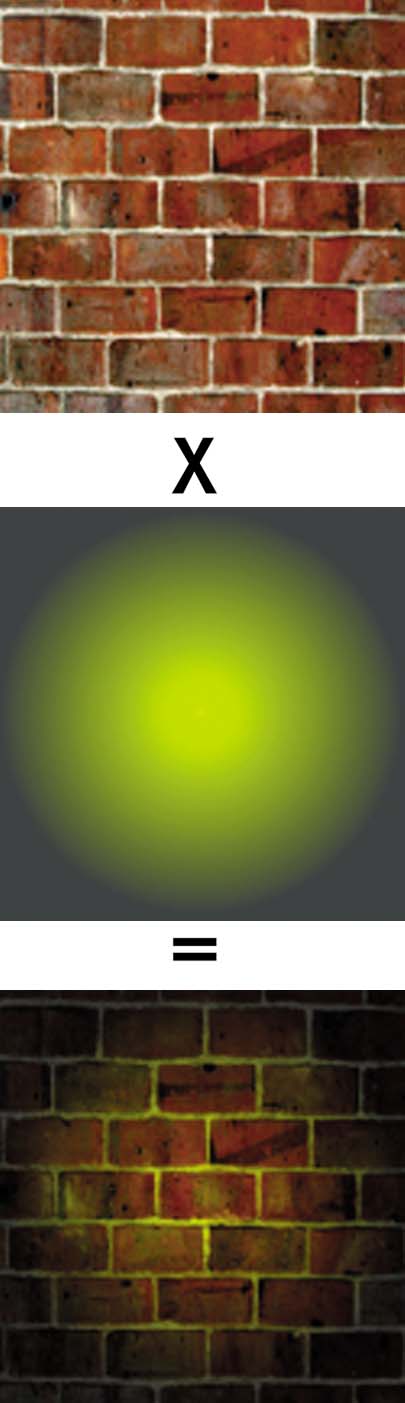
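For the curious, the “rasterizing” step mentioned earlier (turning a triangle into pixels) can be sketched in a few lines. This is a toy illustration, not how any real engine or GPU does it: the function names `edge` and `rasterize_triangle` are made up for this example, and it brute-forces every pixel instead of using any of the tricks real hardware relies on.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed-area test: the sign tells which side of edge A->B the point P is on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centers fall inside the triangle."""
    covered = set()
    for y in range(height):
        for x in range(width):
            # Sample at the center of the pixel.
            px, py = x + 0.5, y + 0.5
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            # Inside if the point is on the same side of all three edges
            # (accepting either vertex winding order).
            all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_non_negative or all_non_positive:
                covered.add((x, y))
    return covered


# One triangle on a tiny 4x4 "screen".
pixels = rasterize_triangle((0, 0), (4, 0), (0, 4), width=4, height=4)
for y in range(4):
    print("".join("#" if (x, y) in pixels else "." for x in range(4)))
```

Now imagine doing that for the millions of triangles in a flower, sixty times a second, and you can see why artists ration their polygons.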

On to light! Waaaay back in the day (pre-DirectX 6 generation), games didn’t really have “light.” If you play one of these games, you will notice that everything is just kind of “lit up,” not bright and not dark. DirectX 6 brought us light in a “cheating” sort of way via lightmaps (lightmaps were around before DirectX, specifically in the Glide API, but who’s keeping track?). A lightmap is a second texture put onto a model that imitates light falling on it. These look pretty good at first blush, but pretty soon you start to realize that the light never moves (lightmaps are static) and that it’s just a texture. DirectX 7 brought us hardware transform and lighting (T&L) support, a technique for shading objects with light that has largely gone away now that better methods have been developed.

This is what lightmapping looks like. Just in case you felt nostalgic.
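The lightmap trick boils down to multiplying one texture by another. Here is a minimal sketch, assuming textures are just nested lists of RGB tuples and the lightmap holds one pre-baked brightness value between 0.0 and 1.0 per texel. The name `apply_lightmap` and the sample data are invented for illustration.

```python
def apply_lightmap(base_texture, lightmap):
    """Multiply each base texel (r, g, b) by the matching lightmap intensity."""
    lit = []
    for base_row, light_row in zip(base_texture, lightmap):
        lit.append([
            tuple(round(channel * intensity) for channel in texel)
            for texel, intensity in zip(base_row, light_row)
        ])
    return lit


# A 2x2 brick-colored texture and a pre-baked (static) lightmap for it.
brick = [[(200, 80, 60), (200, 80, 60)],
         [(200, 80, 60), (200, 80, 60)]]
baked_light = [[1.0, 0.5],
               [0.5, 0.0]]

lit_brick = apply_lightmap(brick, baked_light)
print(lit_brick)
```

Because `baked_light` is computed once, ahead of time, nothing in this picture reacts when a light moves. That is exactly the weakness described above.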

By better methods, I mean shaders (DirectX 8)! In my previous article, I stressed how shaders revolutionized 3D gaming, but my emphasis was more on their role in simulating additional polygons. Their primary purpose was lighting, however. Shaders have performed numerous lighting functions. Some of these include light “shading” objects with its color (hence the name “shader”), volumetric lighting and crepuscular rays (light rays from a single bright source, like the sun behind a cloud or Helios in God of War III), and light beam effects (Bioshock Infinite is a good example of this).

Crepuscular rays. I wanted a screenshot of Helios from GoW III, but I couldn’t find one that showcased it. Sorry!

In DirectX 9.0c, a major change was made in the lighting model. Previously, light sources were assigned a numerical value for their brightness, with 0 being fully dark and 1.0 being fully bright. So a really bright light (like the sun) would be given a value of 1.0, while a 40 watt lightbulb might be given a value of, say, 0.2. Reflective surfaces (water, mirrors, wet rocks, etc.) were given a value for how much light they reflected. A mirror might reflect almost all light, a lake maybe 80%, and a wet rock perhaps 50%. But is this realistic? If the sun shines on a wet rock in real life, maybe it will lose 50% of its brightness. But 50% of really freaking bright is still really freaking bright. In a game, however, that wet rock is going to reduce the sun from 1.0 to 0.5. It isn’t very bright anymore!

To accommodate this, DirectX was adjusted to allow for much higher brightness levels. The sun could now be given a stupid brightness like 100,000,000. It will still display as full brightness on a monitor, but once reflections and the like are taken into account, the results will display properly. This is called High Dynamic Range Rendering (HDRR).

A decent before and after of HDRR. I like the example for bloom below better, though.
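The wet rock arithmetic is easy to check for yourself. This is a rough sketch using the numbers from the example above. The function name `displayed_brightness` is made up, and the hard clamp at 1.0 is a simplifying assumption: real HDR pipelines typically use a tone-mapping step instead of simply cutting values off.

```python
def displayed_brightness(light_intensity, reflectivity):
    """Reflect a light off a surface, then clamp to the monitor's 0.0-1.0 range."""
    reflected = light_intensity * reflectivity
    return min(reflected, 1.0)


WET_ROCK = 0.5  # reflects 50% of incoming light

# Old model: the sun is capped at 1.0 before it ever hits the rock.
old_sun_on_rock = displayed_brightness(1.0, WET_ROCK)

# HDR model: the sun is absurdly bright, and only the final result is clamped.
hdr_sun_on_rock = displayed_brightness(100_000_000.0, WET_ROCK)

print(old_sun_on_rock)  # 0.5, a dull gray highlight
print(hdr_sun_on_rock)  # 1.0, still blindingly bright
```

The important design choice is where the clamp happens: at the very end, after all the lighting math, rather than on the light source itself.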

Another addition in DirectX 9 was bloom lighting (although it was simulated in Ico much earlier). When you take a picture with a bright light coming through a window, say, the light will “bleed” around the edges. This effect is caused by the diffraction of light in the camera. It isn’t really important why it happens; the point is that it happens. Bloom lighting enables game developers to simulate this light bleed.

Bloom, plus some really nice HDRR!
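A bloom pass usually comes down to three steps: pick out the pixels that are brighter than the screen can show, smear that extra brightness onto the neighbors, and add it back to the picture. The sketch below does this on a single row of brightness values to keep it short. The name `bloom_row`, the `threshold` and `spread` parameters, and the crude one-pixel smear are all assumptions for illustration; real implementations work on full 2D images with a proper blur.

```python
def bloom_row(pixels, threshold=1.0, spread=0.25):
    """Apply a toy bloom to one row of HDR brightness values."""
    # Bright pass: keep only the brightness above the threshold.
    bright = [max(p - threshold, 0.0) for p in pixels]

    # Smear: each pixel hands `spread` of its excess to each direct neighbor.
    glow = [0.0] * len(pixels)
    for i, excess in enumerate(bright):
        if i > 0:
            glow[i - 1] += excess * spread
        if i < len(pixels) - 1:
            glow[i + 1] += excess * spread

    # Composite: add the glow back and clamp to the displayable range.
    return [min(p + g, 1.0) for p, g in zip(pixels, glow)]


# A dark wall with one very bright "window" pixel in the middle.
row = [0.1, 0.1, 3.0, 0.1, 0.1]
bloomed = bloom_row(row)
print(bloomed)
```

The pixels next to the window get noticeably brighter while the far ones stay dark, which is the “bleed” effect. Notice that this only works because the window is stored as 3.0 rather than capped at 1.0, so bloom and HDRR go hand in hand.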

So what is the future? The first and biggest change to look forward to is particles. Lots and lots of particles. These small, light-emitting points will allow developers to create more realistic looking fire, and will allow for neat light effects like light shining on dust particles.

Particles. Wow. Just wow.

Another change applies to shadows. I could write an entire article on shadow rendering (maybe I will!), but for now, it is enough to say that the biggest problem with shadow rendering is the way light tends to “bleed” around things. Shadows in general are very hardware intensive, so newer hardware is always better. Newer video cards allow for Multi-View Soft Shadows, which more realistically simulate how light casts and influences shadows.

Notice how the shadows diffuse.

Groan inducing pun time: The future of 3D gaming is looking bright!

I’m so sorry.

-Thanks wololo for article